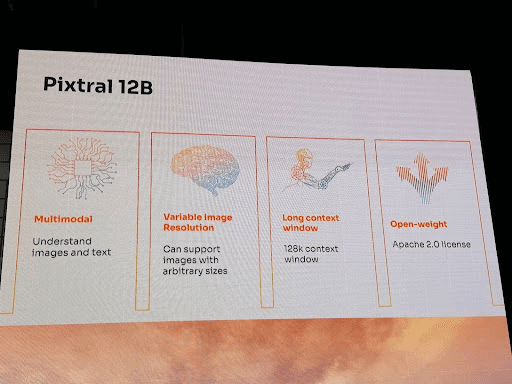

Mistral AI has released Pixtral 12B, its first multimodal model, which can process both text and images. It is built on the company's existing Mistral NeMo 12B language model. This 24GB model is freely available for download and fine-tuning under the permissive Apache 2.0 license. The model boasts a .

Key Highlights:

Versatile Image Handling - Pixtral 12B natively supports images of arbitrary sizes and resolutions, processing them quickly regardless of dimensions. It excels at handling both small images and complex, high-resolution images, including those requiring OCR.

Long Context Capabilities - The model can process any number of images within a single prompt, limited only by its 128k-token context window, which leaves room for substantial amounts of both text and visual input.

Strong Performance - Benchmark results show Pixtral 12B outperforming open-source models such as LLaVA and Phi-3 Vision on key tasks, including multimodal reasoning (MMMU, MathVista) and multimodal question answering (ChartQA, DocVQA, VQAv2). It also competes strongly with the closed models Claude 3 Haiku and Gemini 1.5 Flash 8B.

Open Source - The model can be downloaded from GitHub and Hugging Face under the Apache 2.0 license. Pixtral 12B will soon be available through Mistral's Le Chat and La Plateforme.
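Although the model accepts an arbitrary number of images per prompt, every image consumes part of the 128k context window. The Python sketch below estimates that budget. It is only an illustration under stated assumptions: reading "128k" as 128 × 1024 tokens, the 16-pixel patch size, the one-token-per-patch cost, and the helper names `image_tokens`, `fits_in_context`, and `max_images` are all hypothetical and do not come from Mistral's documentation.

```python
import math

# Assumption: "128k" is read as 128 * 1024 tokens; the exact limit may differ.
CONTEXT_WINDOW = 128 * 1024


def image_tokens(width: int, height: int, patch: int = 16) -> int:
    """Hypothetical image cost: one token per patch x patch tile.

    The default patch size of 16 pixels is an illustrative assumption.
    Partial tiles at the right/bottom edges are rounded up to a full tile.
    """
    return math.ceil(width / patch) * math.ceil(height / patch)


def fits_in_context(text_tokens: int, image_sizes: list[tuple[int, int]],
                    window: int = CONTEXT_WINDOW) -> tuple[bool, int]:
    """Return (fits, total_tokens) for a prompt with text plus several images."""
    total = text_tokens + sum(image_tokens(w, h) for w, h in image_sizes)
    return total <= window, total


def max_images(text_tokens: int, width: int, height: int,
               window: int = CONTEXT_WINDOW) -> int:
    """Count how many same-sized images fit alongside the given amount of text."""
    remaining = window - text_tokens
    return max(0, remaining // image_tokens(width, height))


if __name__ == "__main__":
    ok, total = fits_in_context(2000, [(1024, 1024)] * 10)
    print(ok, total)                  # True 42960
    print(max_images(0, 1024, 1024))  # 32
    print(max_images(0, 512, 512))    # 128
```

Under these assumptions, ten 1024×1024 images plus 2,000 tokens of text use 42,960 tokens, and at most 32 such images fit in the window with no text at all. Halving each image dimension cuts the per-image cost by four, so in this simplified model total pixel count, rather than the number of images, is what limits a prompt.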

Reference: https://x.com/MistralAI/status/1833758285167722836?utm_source=www.theunwindai.com&utm_medium=referral&utm_campaign=mistral-ai-releases-opensource-multimodal-model